Nothing in it could exist without the presence of the other: interconnectivity in biological entities. Fungal flora and soil structure depend on the robot to nurture them; the robot relies on their existence to move. The interconnectivity and performativity of all elements generate ambient sound, and a visual interface uncovers the otherwise invisible stream of impact and growth.

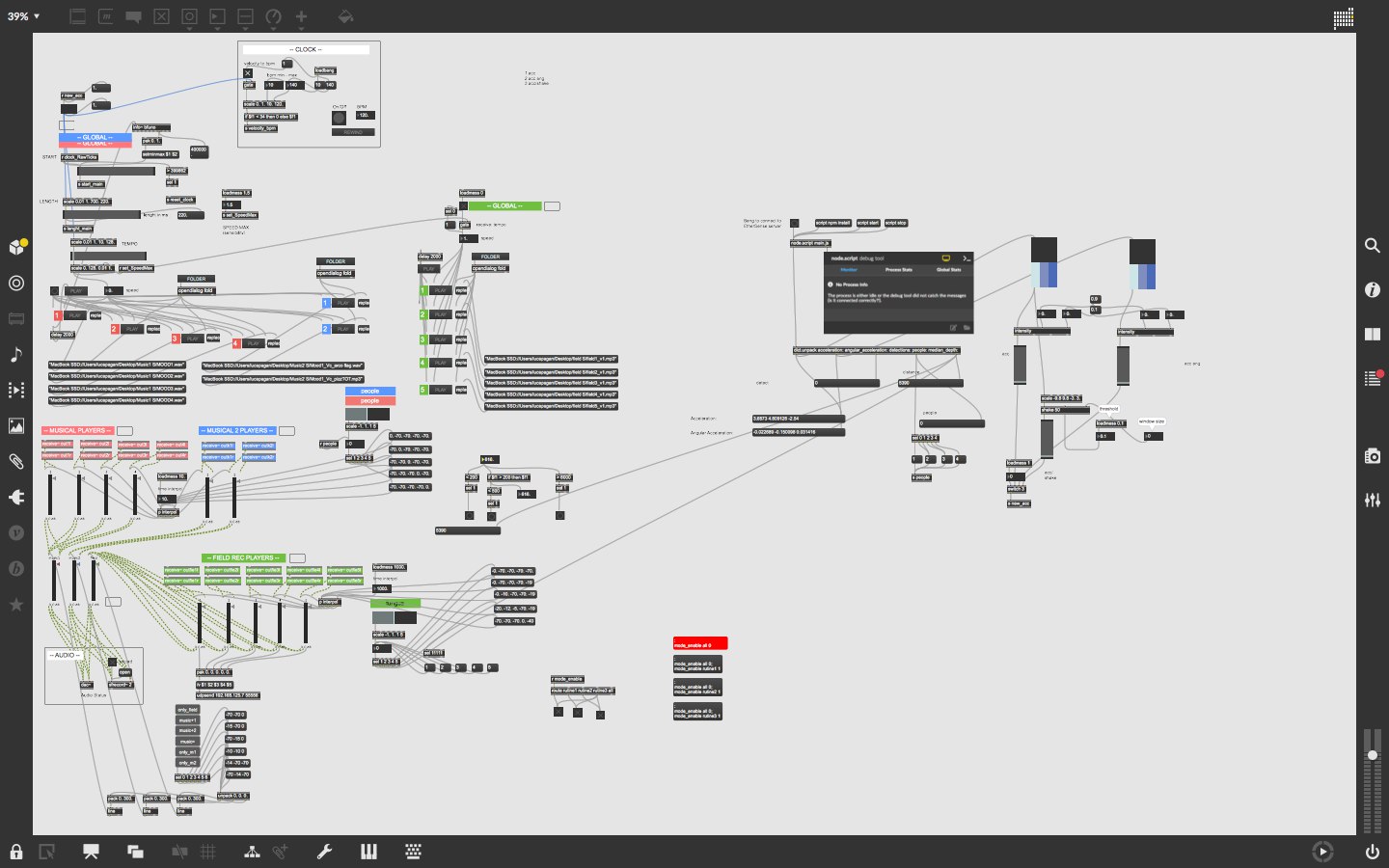

The sonification of MQ is based on a real-time responsive system, which interprets inputs from the robot, the soil and the crowd to build a choreographic and immersive atmosphere. AI-generated soundscapes (trained on hours of field recordings in different natural environments) build an ever-changing sound design.

The changes in distance between the robot and the soil disclose different layers of “voices” from the garden. On a musical level, a custom system based on granular sampling highlights the movements of the robot. Changes of speed, rotations and movements result in synchronised, generative music. The crowd also interferes with the music generation, thanks to a simple YOLO algorithm, which detects the audience in the room and produces an intimate atmosphere when only a few people are watching, and louder sounds when more people are interacting.
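To make these two mappings more tangible, here is a minimal Python sketch of how a detected audience size and a robot-to-soil distance might be turned into sound parameters. It is only an illustration under stated assumptions, not the installation's actual code: the function names, the gain range, the `full_room` threshold and the number of layers are all hypothetical, and the person count is assumed to arrive from whatever detector is in use.

```python
def crowd_gain(person_count, quiet_gain=0.2, loud_gain=1.0, full_room=12):
    """Map a detected person count to an output gain.

    All defaults are hypothetical: few people give a quiet, intimate level,
    and the gain rises linearly until `full_room` people are detected.
    """
    if person_count < 0:
        raise ValueError("person_count cannot be negative")
    fraction = min(person_count, full_room) / full_room
    return quiet_gain + (loud_gain - quiet_gain) * fraction


def layer_weights(distance, near=0.0, far=1.0, layers=3):
    """Crossfade between sound layers as the robot-to-soil distance changes.

    The distance is normalised to the (assumed) range near..far, then each
    layer gets a triangular weight so that neighbouring layers blend.
    """
    t = min(max((distance - near) / (far - near), 0.0), 1.0)
    position = t * (layers - 1)  # fractional index into the layer stack
    return [max(0.0, 1.0 - abs(position - i)) for i in range(layers)]


if __name__ == "__main__":
    # Two visitors, robot a quarter of the way up from the soil.
    print(crowd_gain(2))
    print(layer_weights(0.25))
```

In this sketch the weights of adjacent layers always sum to one, so moving the robot fades one “voice” out while the next fades in rather than switching abruptly.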

Following MAEID’s radical approach to artificial ecologies, the sonification lies somewhere in between carefully designed soundscapes and unpredictable machine behaviours, and tries to give “visibility” to the hidden events in the living artwork.